ReHand

During its second phase (2014-2018), NCCR Robotics developed and tested several components aimed at restoring the human functions naturally used to control grasping. This work included new approaches to decoding grasping commands from cortical and muscular signals, new mechanisms for hand exoskeletons, and shared-control solutions to cope with the limited information that can be decoded about user intentions.

Labs involved: R. Gassert, J. Paik, A. Billard, R. Riener, S. Micera, S. Lacour

Hand exoskeletons

NCCR Robotics has supported the development of two hand exoskeletons.

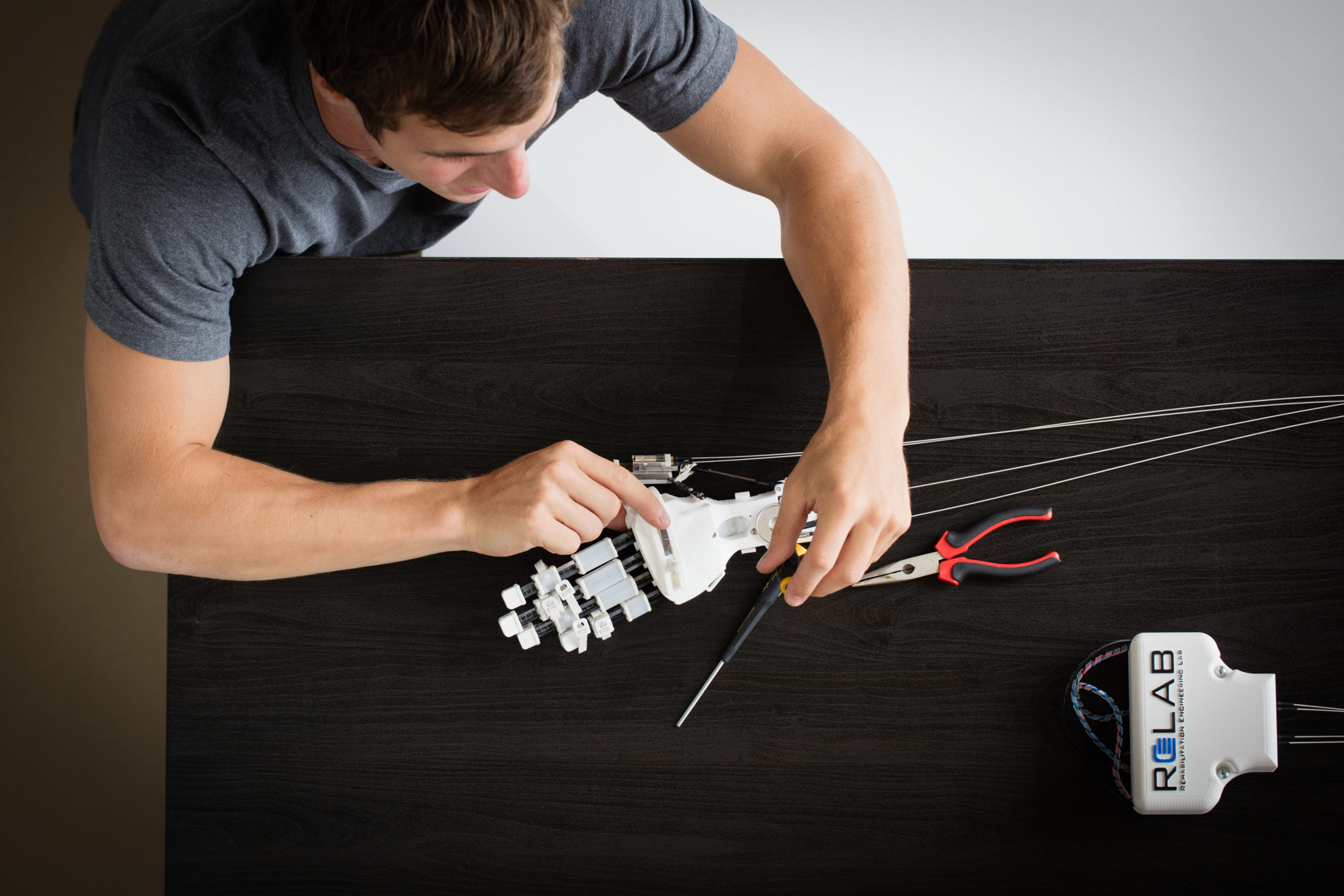

At ETH, the result is tenoexo, a compact and lightweight hand exoskeleton. The EMG-controlled device assists patients with moderate to severe hand motor impairment during grasping tasks in rehabilitation training and during activities of daily living. Its soft mechanism allows it to grasp a variety of objects. Thanks to 3D rapid prototyping, it can be tailored to the individual user.

At EPFL, the work resulted in mano, a novel hand exoskeleton whose main purpose is to support independence and promote intensive use in both clinical and domestic settings. The system was iteratively designed, developed and tested in collaboration with partner clinics, including healthcare professionals (such as neurologists, occupational therapists, ergotherapists and physiotherapists), and with users who suffered from motor-disabling conditions (such as spinal-cord injuries, amyotrophic lateral sclerosis, cerebrovascular accidents and cerebral palsy). The project is now being carried forward in Auke Ijspeert’s lab.

Brain-computer interfaces

Between 2014 and 2018, NCCR Robotics researchers worked on improving brain-computer interfaces (BCIs) that can be used to control soft exoskeletons such as those developed for restoring hand function.

In particular, José Millán’s group at EPFL studied the right mix of information and electrical stimulation to help patients learn how to control an external robotic device.

Two end-user participants in the Cybathlon BCI race demonstrated that mutual learning (where the subject and the pattern recognition system learn from each other) is a critical factor for successful translational BCI applications.

Another goal was to create brain-computer interfaces that incorporate wearable vibrotactile stimulation using novel soft pneumatic actuators (SPAs).

Most BCIs rely only on visual or acoustic feedback, but multisensory feedback is vital to making them more efficient.